Specification V1.1.4

14 October 2013

- Tae-Gil Noh, Heidelberg University <noh@cl.uni-heidelberg.de>

Sebastian Pado, Heidelberg University <pado@cl.uni-heidelberg.de>

Asher Stern, Bar Ilan University <astern7@gmail.com>

Ofer Bronstein, Bar Ilan University <oferbr@gmail.com>

Rui Wang, DFKI <wang.rui@dfki.de>

Roberto Zanoli, FBK <zanoli@fbk.eu>

Table of Contents

- 1. Introduction

- 2. Elements of the EXCITEMENT platform

- 3. Linguistic Analysis Pipeline (LAP)

- 4. Common Interfaces of the Entailment Core

- 4.1. Requirements for the Entailment Core

- 4.2. EDA Interface

- 4.3. Auxiliary Layer for EDAs

- 4.4. Common functionality of components: The

Componentinterface - 4.5. Interface for Scoring Components

- 4.6. Interface of distance calculation components

- 4.7. Interface of lexical knowledge components

- 4.8. Interface of syntactic knowledge components

- 4.9. Concurrent Processing

- 4.10. Initialization and metadata check

- 5. Common Data Formats

- 6. Linguistic Analysis Pipeline

- 7. Further Recommended Platform Policies

- 8. References

Appendixes

- A. Type Definition: types for general linguistic analysis

- B. Type Definition: types related to TE tasks

- C. Entailment Core Interfaces

- C.1.

interface EDABasicand related objects - C.2.

interface SinglePairProcessHelper - C.3.

interface EDAMulti* - C.4.

class MultipleTHModeHelper - C.5.

interface Components - C.6.

interface DistanceCalculationand related objects - C.7.

class LexicalResourceand related objects - C.8.

interface SyntacticResourceand related objects

- C.1.

- D. Supported Raw Input Formats

Identifying semantic inference relations between texts is a major underlying language processing task, needed in practically all text understanding applications. For example, Question Answering and Information Extraction systems should verify that extracted answers and relations are indeed inferred from the text passages. While such apparently similar inferences are broadly needed, there are currently no generic semantic inference engines, that is, platforms for broad textual inference.

Annotation tools do exist for narrow semantic tasks (i.e. they consider one phenomenon at a time and one single fragment of text at time). Inference systems assemble and augment them to obtain a complete inference process. By now, a variety of architectures and implemented systems exist, most at the scientific prototype stage. The problem is that there is no standardization across systems, which causes a number of problems. For example, reasoning components cannot be re-used, nor knowledge resources exchanged. This hampers in particular the pick-up of textual entailment as a "standard" technology in the same way that parsing is used, by researchers in semantics or NLP applications.

EXCITEMENT has two primary goals (see the project proposal for details):

Goal A: Develop and advance a comprehensive open-source platform for multi-lingual textual inference, based on the textual entailment paradigm.

Goal B: Inference-based exploration and processing of customer interactions at the statement level, for multiple languages and interaction channels.

where Goal B builds strongly on the development of the platform for Goal A. We envisage the role of this platform to be similar to the one played by MOSES in the Machine Translation community -- that is, as a basis for reusable development. For these goals, we work towards the following properties:

Algorithm independence. The platform should be able to accommodate a wide range of possible algorithms (or more generally, strategies) for entailment inference.

Language independence. Similarly, the platform should be, as far as possible, agnostic to the language that is processes so that it can be applied to new languages in a straightforward manner.

Component-based architecture. We base our approach onto the decomposition of entailment inference decisions into a set of more or less independent components which encapsulate some part of the entailment computation -- such as the overall decision and different kinds of knowledge. Since components communicate only through well-defined interfaces, this architecture makes it possible to combine the best possible set of components given the constraints of a given application scenario, and the re-use of components that have been developed.

Versatility. The platform should be configurable in different ways to meet the needs of different deployment scenarios. For example, for deployment in industrial settings, efficiency will be a primary consideration, while research applications may call for a focus on precision.

Clear specification and documentation.

Reference implementation. We will provide an implementation of the platform that covers a majority of proposed entailment decision algorithms together with large knowledge resources for English, German, and Italian.

For reasons of practicality, the implementation will be based on three preexisting systems for textual entailment. These systems are:

The role of this document is to meet the third goal -- to provide a specification of the EXCITEMENT platform. Our goal for the specification is to be as general as possible (in order to preserve the goal of generality) while remaining as specific as necessary (to ensure compatibility). At a small number of decisions, we have sacrificed generality in order to keep the implementation effort manageable; these decisions are pointed out below.

The structure of this document mirrors the aspects of the EXCITEMENT platform that require specification. These aspects fall into two categories: actual interfaces, and specification of meta-issues.

Regarding interfaces, we have to specify:

The linguistic analysis pipeline which creates some data structure with linguistic information which serves as the input for the actual entailment inference (Section 3).

The interfaces between components and component groups within the actual entailment computation (Section 4).

As for meta-issues, we need to address:

The shape of the overall architecture and the definition of terminology (Section 2).

Further standardization issues such as configuration, shared storage of resources, error handling etc. (Section 5)

Currently outside the scope of this document is the specification of the transduction layer. This layer is part of the work towards Goal B. It translates between the queries posed by the industrial partners' use cases and entailment queries that can be answered by the open platform developed for Goal A. The transduction layer will be described in a separate document, to be released as Deliverable 3.1b.

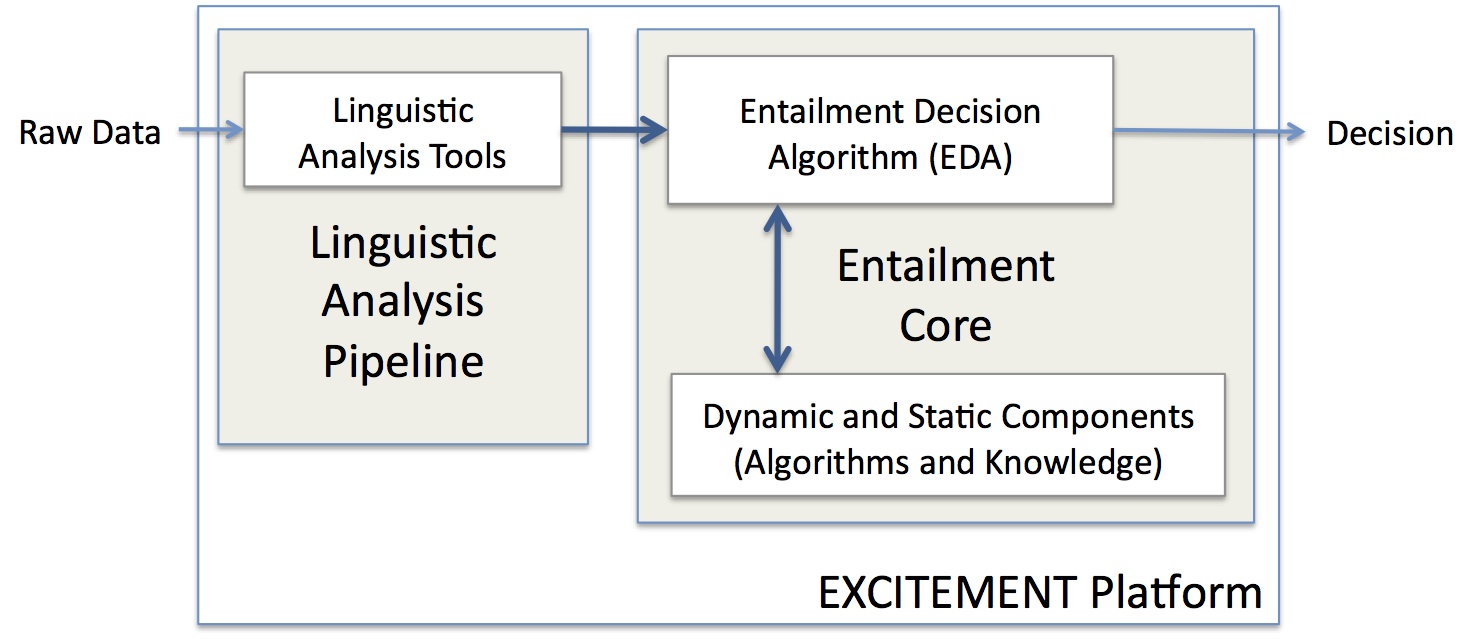

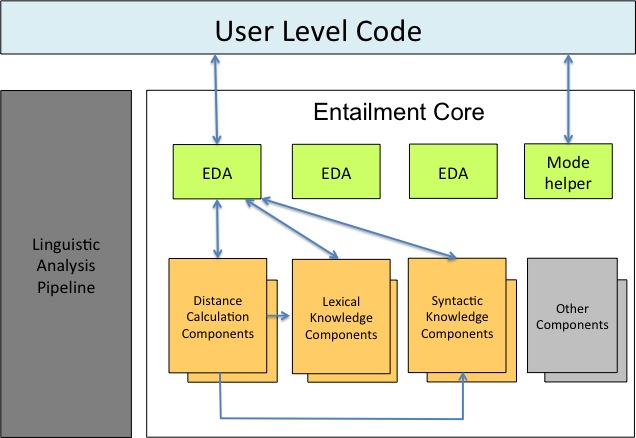

As sketched, we assume that it is beneficial to decompose the recognition of textual entailment into individual components which are best developed and independent of each other. This approach gives rise to the overall system architecture that is shown in Figure 1, “The EXCITEMENT platform”.

The most important top-level distinction is between the Linguistic Analysis Pipeline (LAP) and the Entailment Core. We separate these two parts in order to (a), on a conceptual level, ensure that the algorithms in the Entailment Core only rely on linguistic analyses in well-defined ways; and (b), on a practical level, make sure that the LAP and the Entailment Core can be run independently of one another (e.g., to preprocess all data beforehand).

Since it has been shown that deciding entailment on the basis of unprocessed text is a very difficult endeavor, the Linguistic Analysis Pipeline is essentially a series of annotation modules that provide linguistic annotation on various layers for the input. The Entailment Core then performs the actual entailment computation on the basis of the processed text.

The Entailment Core itself can be further decomposed into exactly one Entailment Decision Algorithm (EDA) and zero or more Components. An Entailment Decision Algorithm is a special Component which computes an entailment decision for a given Text/Hypothesis pair. Trivially, each complete Entailment Core is an EDA. However, the goal of EXCITEMENT is exactly to identify functionality within Entailment Cores that can be re-used and, for example, combined with other EDAs. Examples of functionality that are strong candidates for Components are WordNet (on the knowledge side) and distance computations between text and hypothesis (on the algorithmic side). Both of these can be combined with EDAs of different natures, and should therefore be encapsulated in Components.

In order to use the EXCITEMENT infrastructure, a user will have to configure the platform for his application setting, that is, for his language and his analysis tools (on the pipeline side) and for his algorithm and components (on the entailment core side).

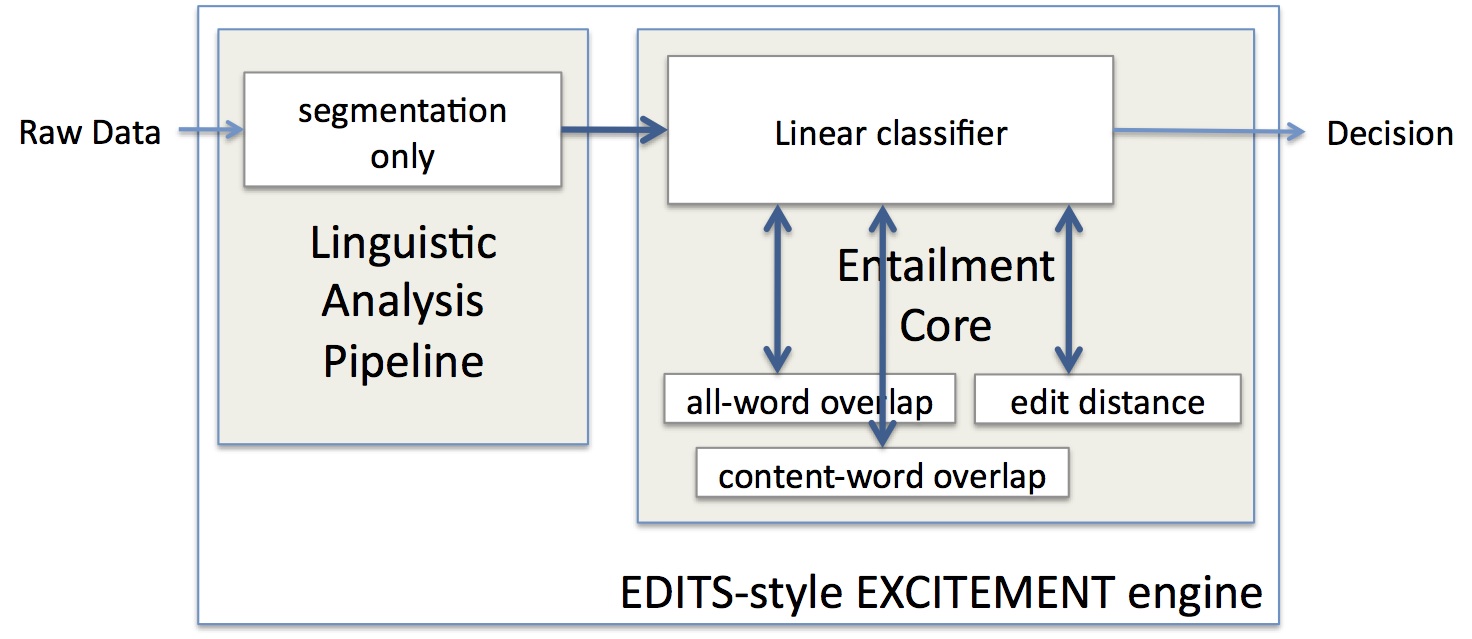

For an example, consider Figure Figure 2, “An EDITS-style EXCITEMENT engine”. It shows an engine instantiating the EXCITEMENT platform that mirrors the functionality of the EDITS system. The linguistic analysis pipeline remains very basic and only provides fundamental tasks (sentence segmentation and tokenization). The components are different variants of simple distance and overlap measures (at the string token level) between text and hypothesis. The EDA is a simple linear classifier which computes weighted output of the components into a final score and compares it against a threshold.

This is a supervised learning setup -- both the weights for components and the threshold must be learned. Therefore, training must be part of the EXCITEMENT platform's functionality.

Note also that although this engine is fairly generic, it is not completely language-independent. Language-specific functionality is definitely part of the content word overlap component (at least in the form of a "closed class word list") and potentially also in the linguistic analysis pipeline, for example in the form of tokenization knowledge. For this reason, the LAP must enrich the input with meta data that describes its language, as well as the performed preprocessing steps so that the Entailment Core can verify the applicability of the current Engine configuration to the input data.

The key words must, must not, required, shall, shall not, should, should not, recommended, may, and optional are to be interpreted as described in [RFC 2119]. Note that for reasons of style, these words are not capitalized in this document.

- Entailment Platform

The totality of the infrastructure provided by the EXCITEMENT project for all languages and entailment paradigms. The Platform can be configured into an Entailment Engine.

- Entailment Engine

A configured instance of the Entailment Platform that makes entailment decisions for one language. An Entailment Engine consists of a Linguistic Analysis Pipeline and an Entailment Core.

- Linguistic Analysis Pipeline (LAP)

A set of linguistic tools that analyze a given set of Text-Hypothesis pairs, typically performing steps such as sentence splitting, POS tagging, Named Entity Recognition etc.

- Entailment Core

A part of an Entailment Platform that decides entailment for a set of Text-Hypothesis pairs that have been processed in the Linguistic Analysis Pipeline. The Entailment Core consists of exactly one Entailment Decision Algorithm and zero or more Components.

- Entailment Decision Algorithm (EDA)

An Entailment Decision Algorithm is a Component which takes a Text-Hypothesis pair and returns one of a small set of answers. A complete entailment recognition system is trivially an EDA. However, in the interest of re-usability, generic parts of the system should be made into individual Components. Entailment Decision Algorithms communicate with Components through generic specified interfaces.

- Component

Any functionality that is part of an entailment decision and which is presumably reusable. This covers both "static" functionality (that is, knowledge) and "dynamic" functionality (that is, algorithms). A typology of Components is given in Section 4.1.3, “Identifying common components and interfaces ”.

- User code

Any code that calls the interfaces and utilizes the types defined in this specification. This includes the "top level" entailment code that calls the LAP and the EDA to apply an engine to an actual data-set.

- Interface

A set of methods and their signatures for a class that define how the class is supposed to interact with the outside world

- Type

We use the term "type" for classes that denote data structures (i.e. which have a representational, rather than algorithmic, nature).

- Contract

Further specification on how to use particular interfaces and types that goes beyond signatures. For example, rules on initialization of objects or pre/postconditions for method calls.

The goal of this document is to define interfaces that allow the use of a wide range of externally developed linguistic analysis modules and inference components within the EXCITEMENT platform. An issue that arises in practice is that externally developed software will come with its own licenses, which may be problematic. (NB. This question also concerns libraries that might be used in EXCITEMENT, for example regarding data input/output or configuration.)

This issue requires more future discussion among partners. Our momentary analysis of the situation is that we need to distinguish between the two major application scenarios of EXCITEMENT, namely as the open-source platform and in industry partners' systems.

Open-Source Platform. Within the open-source platform, externally developed modules will generally be admissible as long as their license permits academic use. We will however only be able to package modules as part of the platform that permit redistribution; other modules will have to be installed separately by the end users.

Which license the open-source platform itself will be released under is still undecided.

Industrial Systems. We will need to adopt a more restricted policy for the use of EXCITEMENT in the industrial systems. Only software that permits commercial use is admissible. Major licenses that meet this requirement are the Apache license; the BSD license; CC licenses that are not part of the NC family; the MIT license. The GPL is presumably unsuitable since it requires distribution of the complete system under the GPL. Under the assumption that the resulting systems will be used only in-house at the industrial partners, at least for the time being, the question of whether redistribution is permissible does not arise here.

This section describes the specification (interfaces and exchange data structure) of the Linguistic Analysis Pipeline.

This subsection describes the requirements for two aspects of the LAP: First, the user interface of the LAP. Second, the type (i.e., data structure) that is used to exchange data between the LAP and the Entailment Core.

Separation between LAP and entailment core. (This is a global requirement.)

Language independence. The pipeline should not be tied to properties of individual languages.

"One-click analysis". The pipeline should be easy to use, ideally runnable with one command or click.

Customizability. The pipeline should be easily extensible with new modules.

Easy configuration. The pipeline should be easy to (re)configure.

Language independence. The data structure should be able to accommodate analyses of individual languages.

Linguistic formalism independence. The data structure should be independent of specific linguistic theories and formalisms.

Extensible multi-level representation. The data structure should be extensible with new linguistic information when the LAP is extended with new modules.

Support for in-memory and on-disk storage. The data structure should be serializable so that LAP and Entailment Core can be run independently of one another.

Metadata support. The data structure should encode metadata that specify (a) the language of the input data and (b) the levels and type of linguistic analysis so that the Entailment Core can ensure that the input meets the requirements of the current Engine configuration.

An option which we initially considered was the CoNLL shared task tabular format (http://ufal.mff.cuni.cz/conll2009-st/task-description.html). We rejected this possibility because it could not meet several of our requirements. (1), it does not specify a pipeline, just an interchange format. (2), it is extensible but at the same time fairly brittle and unwieldy for more complex annotations (cf. the handling of semantic roles in CoNLL 2009 which requires a flexible number of columns). (3), it only specifies an on-disk file format but no in-memory data structure. (4), no support for metadata.

UIMA (Unstructured Information Management Applications) is a framework that started as a common platform for IBM NLP components. It evolved into a well-developed unstructured information processing platform, which is now supported by Apache Foundation as an open source framework. It has been used by many well known NLP projects and has healthy communities in both academic and commercial domain.

UIMA provides a unified way of accessing linguistic analysis components and their analysis results. All analysis modules are standardized into UIMA components. UIMA components shares a predefined way of accessing input, output, configuration data, etc. Within UIMA, it is easy to setting up a composition of analysis components to provide new pipelines, or adopt a newly developed analysis module to already existing pipelines.

On the top level, the unification of UIMA components is achieved on two levels.

The first is unification of components behavior. Instead of providing different APIs for each analysis module, UIMA components all shares a set of common methods. Also, calling and accessing of a component is done by the UIMA framework, not by user level code. Users of a component do not directly call the component. Instead, they request the UIMA framework to run the component and return the analysis result. The framework then calls the component with predefined access methods contracted among UIMA components. This common behavior makes it possible to treat every component as pluggable modules, and enables the users to establish two or more components to work together.

The second unification is done with common data representation. If the inputs and outputs of each component are not compatible, then the unification of components' behaviors is not really meaningful. In UIMA, the compatibility of input and output is provided by the Common Analysis Structure (CAS) [UIMA-CAS]. One can see CAS as a data container, which holds original texts with various layers of analysis results that annotates the original text. As a CAS is passed through analysis engines, additional annotation layers are stacked into the CAS. Input and output of every components are standardized in terms of the CAS. Every component works on the CAS as the input, and adds something to the CAS as output. Thus, within UIMA, each components capabilities can be described in terms of CAS; what it needs in the CAS to operate (for example, a parser might need the result of a POS tagging step), and what it will add on the CAS after the processing (like a dependency parse tree).

CAS itself is a container. The actual content (analysis result) is defined by the CAS type systems. UIMA provides a generic type system that is strong enough to describe various annotation layers (like POS tagging, parsing, coreference resolution, etc). Definition and usage of common type system is one important aspect of using CAS. The UIMA framework itself only provides a handful of default types like strings, and double values. Additional types must be proposed or adopted for actual systems; we can however cover most of our requirements with existing type systems.

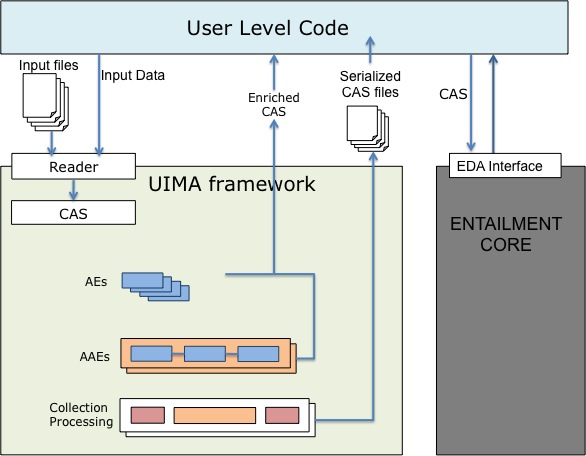

The following figure shows the Apache UIMA Java implementation as the LAP of EXCITEMENT. It provides a unified way of calling linguistic analysis components for user code.

UIMA comes with various components and tools. However, in this specification, we will only review the components that an EDA developer should provide to the EXCITEMENT LAP as UIMA components. They are also the components that EXCITEMENT top level users can access from the linguistic analysis pipeline. Figure 3, “UIMA as the linguistic analysis pipeline of EXCITEMENT ” shows them. We first provide generic definition of AE, AAE and collection processing. Then we will state what EDA implementers should provide to realize an EXCITEMENT LAP.

AE. Analysis modules of UIMA are called as Analysis Engines (AEs). For example, individual analysis components like POS tagger, parser, NER annotators are AEs. All AEs share a set of contracted methods, and they will be accessed by the UIMA framework. All analysis results of an AE are returned to the caller as added layers on a CAS.

AAE. UIMA also provides analysis pipelines that are called Aggregated Analysis Engines (AAEs). An AAE is defined by composing multiple AEs. For example, a pipeline that outputs parsing results can be composed by subsequent calls of a sentence splitter AE, a POS tagger AE, and a parser AE. UIMA provides a standard way to compose analysis pipelines without changing anything on the individual analyzers.

Collection Processing (and UIMA Runtime). A linguistic analysis pipeline often needs to work on a collection of documents and produce a set of enriched documents. For example, a user might want to optimize parameters of a TE system with repeated experiments on the same dataset. In this case, repeating the same linguistic preprocessing would be redundant. AAEs do not provide any capability for accessing files or processing collections. It is the responsibility of the AAEs caller to recognize such cases and avoid redundant calls. To guarantee that this happens in a uniform manner, UIMA provides the concepts of UIMA runtime and collection reader.

A collection reader is a UIMA component that is specialized in data reading and CAS generation. Compared to an AE, it shares a different set of contractual methods. A collection reader reads an input (document) and generates a CAS that can be processed by AEs.

A UIMA runtime is a runner mechanism that enables running of AEs. If a user calls an AE directly (via the framework), the component is actually running in the caller's space (same process, thread). In this case, running AEs on multiple files would still be the caller's responsibility. A UIMA runtime is designed to take over that responsibility. A runtime is capable of iterating over a set of documents in its own space. Apache UIMA and the UIMA community provide various runtimes, from simple single thread runtime to asynchronous distributed runtimes. Examples for UIMA runtimes are UIMA CPE/CPM (collection processing engine / collection processing manager), UIMA AS (Asynchronous scale-out), UIMAfit simple pipeline, and the UIMAfit hadoop runtime.

The LAPs of EXCITEMENT exist primarily for processing textual entailment data. As a secondary goal, the LAPs need to provide linguistic analysis capabilities for the user level code and the transduction layer. With these goals in mind, it is possible to formulate more focused requirements for EXCITEMENT LAP components. An EXCITEMENT LAP should provide the following capabilities:

Calling individual analysis components: A single AE represents an individual linguistic analysis component, like tagger, tokenizer, or parser. The user code can call an AE, or a set of AEs to utilize the analysis capability provided by each component.

Calling a prepared pipeline: A specific EDA needs a specific level of analysis. For example, one EDA might need "tokenizer - POS tagger" analysis sequence, and another EDA might need "tokenizer - POS tagger - parser - NER" sequence. UIMA AAE can provides this specific analysis sequence that is optimized for a specific EDA. Note that AAEs do not (and should not) know about the concepts of Text and Hypothesis. AEs and AAEs treat given CAS (or CAS view) as plain running text, and simply process a sequence of analysis.

Calling a collection processing for EDAs: The EDA interface requires a CAS that is prepared for EDAs. This will include types and views specially designed to represent textual entailment problems. AEs and AAEs do not know about TE-related types or setups. Such entailment specific types are prepared by a "collection processor". The flow of a collection processor starts with a "collection reader". A collection reader reads one (or more) textual entailment problem input (like RTE data), and generates a UIMA CAS with views and types defined for entailment problems. Then, the collection processor calls one configured AAE for each view. For example, if the processor calls an AAE prepared for some EDA (say EDA-A), the CAS is now ready to be processed by "EDA-A". If the views are processed by an AAE prepared for "EDA-B", the resulting CAS (or CASes) is prepared for "EDA-B".

At the time of writing of this specification (V1.0), the EXCITEMENT consortium has made the following decision regarding the adoption of UIMA within the linguistic analysis pipeline:

We will definitely adopt the UIMA type system.

Given the existence of working pipelines that form part of the three existing systems, we do not immediately adopt a stance with regard to the adoption of the UIMA runtime system. The minimal level of interoperability that these pipelines must provide at the moment is that they conform to the LAP input (Section 5.2, “Input file format ”) and output (CAS) specifications.

For the future (2013/14), we see a number of graded options regarding UIMA adoption:

Status quo (minimal interoperability)

Wrapping of pipelines into AAEs (basic use of UIMA runtime)

Decomposition of pipelines into AEs (advanced use of UIMA runtime)

The choice among these future strategies will be taken when consortium partners have had more practical experience in working with UIMA.

More formally, the following requirements fall out of these decisions:

All pipeline implementations must utilize the common type system. The common type system is described in Section 3.3, “Type Systems”. The EDA implementer may extend the type system to meet particular information needs. This is discussed in Section 3.3.5, “Extending existing types”.

The implementer must provide linguistic pipelines that can process T-H pairs and generate proper CAS structures that can be consumed by the EDA. This is specified in Section 3.4.2, “Providing an analysis pipeline”.

It is recommended that the implementer provides the ability to pre-process a set of documents and produce a set of properly serialized CAS structures that can be consumed by the EDA. This is specified in Section 3.4.3, “Providing serialized outputs for a set of documents”.

The implementer can provide individual linguistic analysis components as individual AEs. This is specified in Section 3.4.1, “Providing individual analysis engines (AEs)”. In some cases, providing individual engine might not be desirable. Treatment of such cases are described in subsection Section 3.4.4, “An alternative: Independent pipelines with CAS output”.

This specification provides no further information on UIMA. Please consult external UIMA documentation such as [UIMA-doc] to learn more about the UIMA framework and on how to build UIMA components.

The annotation layers of a CAS are represented in terms of UIMA types. To ensure the compatibility of components and their consumers, it is vital to have a common type system. UIMA community already has well developed type systems that cover generic NLP tools. Two notable systems are DKPro [DKPRO] and ClearTK [CLEARTK]. They cover almost all domains of common NLP tools, including dependency and constituent parsing, POS tagging, NER, coreference resolution, etc. Their type systems are also fairly language-independent. For example, the POS tags of DKPro have been used for taggers of Russian, English, French, and German, and its parse annotations have been used in Spanish, German, and English parsers.

Types needed for EXCITEMENT will be defined based on the existing types of the UIMA community. For generic linguistic analysis results, we adopt many types from DKPro. The UIMA types of DKPro components have been used in many applications, and they are relatively well matured types. We also add a few new types that were absent in DKPro, like temporal annotations and semantic role labels. The adopted and proposed UIMA types for generic linguistic analysis are described in Section 3.3.3, “Types for generic NLP analysis results”, and their type listings are included in Appendix A, Type Definition: types for general linguistic analysis.

This specification also defines some UIMA types that are designed to represent textual entailment problems and related annotations. They are including text and hypothesis marking, predicate truth annotation, event annotation, etc. They are described in Section 3.3.4, “Additional Types for Textual Entailment”, and their actual type definitions are included in Appendix B, Type Definition: types related to TE tasks .

Once a type is defined and used in implementations, the cost of changing to another type system is prohibitive. Therefore, the choice of types is a serious decision. At the same time, the type system has to allow for a certain amount of flexibility. First, it needs to permit users to extend it without impacting other components that use already existing types. It even permits old components that are not aware of new types to work with the data annotated with components that work with extended types (see Section 3.3.5, “Extending existing types”). Second, types are generally evolving with the community. In this sense, we must assume that our type system (especially the parts defined specifically for textual entailment problems) may face some changes driven by practical experiences with the EXCITEMENT open platform. In contrast, we assume that the adopted generic NLP types of DKPro constitute a relatively matured system, since it has been around for some years.

Artifact is a UIMA concept which describes the raw input. For example, a document, a web page, or a RTE test dataset, etc, are all artifacts. For the moment, let's assume that we have a web page as an artifact, and the goal is to provide various analysis on the text of the web page.

The generic analysis tools (like POS tagger, parser, etc) do not know about HTML tags of the webpage. And it is not desirable to add such specific tag support to generic tools. In UIMA, generic tools can be used without modifications for such cases, by adopting Views. For the previous example, an HTML detagger module can generate a plain text version of the webpage. This can be regarded as a new perspective (view) on the original data (artifact). In UIMA this new view can be introduced into a CAS. Once the view is introduced in the CAS, subsequent analysis like POS tagging and parsing can process the plain text view of the web page without knowing that it is from a webpage.

Subject OF Analysis (SOFA) is another UIMA concept. It is referring the data subject that is associated with a given view. In the website example, it is the plain text version of the web page. One view has one SOFA, and one CAS can have multiple views. Views (and Sofas) can have names that can uniquely identify them among a given CAS. Annotations of CAS generally have a span: beginning position and the end positing of the annotated text. Such spans are expressed as offset of each SOFA. Thus, annotators (individual analysis engines) are annotating SOFAs, instead of artifacts directly.

A pictorial example of CAS, views and annotations are given after introduction of the types for textual entailments, in Figure 4, “Example of an entailment problem in CAS”.

The UIMA framework itself defines only a handful of default types. They are including primitive types (like string, numerical values), and basic annotation types.

Primitive types only represent simple values, but user-defined types can express arbitrary data by composing primitive types, and other types. For example, a token type might have a string (primitive type) for lemma, another string for POS, and two numbers for starting position and end position of the token. Within UIMA CAS, this list of values are called as feature structures. Each feature in the feature structure has a name and a type, thus only a specific type can be pointed by the feature.

UIMA type system is based on single-parent inheritance system. Each type must have a one super-type, and it gets all features from the super-type.

The followings are non-exhaustive list of the default types that are used in the adopted and proposed type system of the specification.

uima.cas.TOP: This type is the top type of the CAS type hierarchy. All CAS types are subtypes ofTOP. It does not have any feature.uima.cas.String: one of the primitive types. By primitive types, it means that it is not part of normal type hierarchy. Examples of other primitive types are includingBoolean,Integer,Double, etc. String is represented as java string (thus Unicode 16 bit code).uima.tcas.Annotation: This type supports basic text annotation ability by providing span: starting and ending of this annotation. It has two features,beginandend. They areuima.cas.Integer, and associated with a character position of a SOFA. Most of the annotations used in the adopted type system is a subtype of this type.uima.tcas.DocumentAnnotation: This is a special type that is used by the UIMA framework. It has a single featurelanguage, which is a string indicating the language of the document in the CAS.

Fully detailed information on CAS, and types of default CAS can be found in the CAS reference section of the UIMA reference document [UIMA-CAS].

This section outlines adopted and proposed UIMA types for generic NLP analysis results. The full list of the types are given in the Appendix A, Type Definition: types for general linguistic analysis.

In this section, the string DKPRO is used as a short-form of de.tudarmstadt.ukp.dkpro.core.api.

Segmentation represents general segments of document, paragraphs, sentences and tokens. They are defined as extension of UIMA annotation types. Paragraphs and sentences are simple UIMA annotations (uima.tcas.Annotation) that mark begin and end. Token annotation holds additional information, including the lemma (string), stem (string) and POS (POS type).

See DKPRO.segmentation.* types.

POS types are defined as extension of UIMA annotation type (uima.tcas.Annotation). It has feature of PosValue (string). This string value holds the unmapped (original) value that is generated by the POS tagger. Common POS types are defined as inherited types of this POS type. They are including PP, PR, PUNK, V, N, etc. To see all common POS types, see DKPRO.lexmorpth.types.pos and the inherited types (DKPRO.lexmorph.types.pos.*).

Note that POS types have inherited structures --- like NN and NP are subtype of N, etc. Thus, if you access all type.N, you will also get all inherited annotations (like NN, NP), too.

Also note that the POS type can be extended to cover other (more detailed, or domain-specific) tagsets. See also Section 3.3.5, “Extending existing types”.

DocumentMetadata holds meta data of the document, including document ID and collection ID. It is a sub type of UIMA Document annotation, which holds language and encoding of the document. See DKPRO.metadata.type.DocumentMetaData.

Types related to NER data are defined as subtype of UIMA annotation type. It has string feature named "value" that holds output string of NER annotator. Actual entity types are mapped into subtypes that represents organizations, nationality, person, product, etc. See DKPRO.ner.type.NamedEntity and its sub types (DKPRO.ner.type.NamedEntity.*).

Constituency parsing results can be represented with

DKPRO.syntax.type.constituent. It has a set of types

related to constituency parsing. constituent type

represent a parse node, and holds required information such as

parent (single node), children (array), and

constituentType. constituentType field holds the raw data

(unmapped value) of the parser output, and the mapped value are

represented with types, such as type.constituent.DT,

type.constituent.EX, etc. See

DKPRO.syntax.type.constituent.Constituent and its sub

types (DKPRO.syntax.type.constituent.*).

Note that the EXCITEMENT platform has adopted dependency parse trees as its canonical representation for syntactic knowledge (as described in Section 4.8, “Interface of syntactic knowledge components ”). EDA implementers can use constituency parsers and their results within their code, but constituency parse trees cannot be used together with EXCITEMENT syntactic rule-bases, unless they are converted to dependency parse trees.

Dependency parse results are represented with type DKPRO.syntax.type.dependency.Dependency and its subtypes. The main type has three features: Governor (points a segmentation.type.Token), Dependent (points a segmentation.type.Token), and DependencyType (string, holds an unmapped dependency type). Common representation of dependency parse tree are built by subtypes that inherit the type. Each subtype represents mapped dependency, like type.dependency.INFMOD, type.dependency.IOBJ, etc. To see all mapped common dependency types, see DKPRO.syntax.type.dependency.*.

Coreferences are represented with DKPRO.coref.type.CoreferenceLink. It is a UIMA annotation type, and represents link between two annotations. The type holds next feature that points another type.CoreferenceLink, and a string feature that holds the referenceType (unmapped output). Currently it does not provide common type on coreference type. CoreferenceLink represent single link between nodes. They will naturally form a chain, and the start point of such chain is represented by DKPRO.coref.type.CoreferenceChain.

This subsection describes types related to represent semantic role labels. It consists of two types, EXCITEMENT.semanticrole.Predicate and EXCITEMENT.semanticrole.Argument.

Predicate is a uima.tcas.Annotation. It represents a predicate. It holds the predicate sense as string and links to its arguments (An array of Argument). It has the following features:

predicateName(uima.cas.String): This feature represents the name of this predicate. It actually refers to the sense of the predicate in PropBank or FrameNet.arguments(uima.cas.FSArray): This feature is an array ofEXCITEMENT.semanticrole.Argument. It holds the predicate's arguments.

Argument is a uima.tcas.Annotation. It represents an argument. It has two features; the argument's semantic role (type), and a backward reference to the predicate that governs the argument. They are:

argumentName(uima.cas.String): This feature represents the semantic role (type) of this argument. The fact that this feature is a String means that arbitrary role labels can be adopted, such as the PropBank argument set (A0, A1, AM-LOC, etc.), the FrameNet role set (Communication.Communicator, Communication.Message, etc.), or any other.predicates(uima.cas.FSArray): This feature is an array ofEXCITEMENT.semanticrole.Predicate. (Backward) references to the predicates which governs it.

Both annotations are applied to tokens (same span to tokens), and the semantic dependencies are implicitly recorded in the FSArrays. The reason to keep the FSArray for both side is that the redundancy makes the later processing easier (e.g. it is easier to find the corresponding predicates from each argument).

The temporal expression types are needed for event models, and

they are used in event-based EDAs. This section defines the related

types. One is TemporalExpression and the other is

DefaultTimeOfText and its subtypes.

EXCITEMENT.temporal.DefaultTimeOfText is a

uima.tcas.Annotation. It is anchored to a textual region

(a paragraph, or a document), and holds the "default time" that has

been determined for this passage and can be useful to interpret

relative time expressions ("now", "yesterday") in the text. It has one

feature:

time(uima.cas.String): This feature holds the default time for the textual unit which is annotated by this annotation. The time string is expressed in the normalized ISO 8601 format. More specifically, it is a concatenation of the ISO 8601 calendar date and extended time: "YYYY-MM-DD hh:mm:ss".

The second type, EXCITEMENT.temporal.TemporalExpression

annotates a temporal expression. It is a

uima.tcas.Annotation that annotates a temporal expression

within a passage, adding a normalized time representation. It holds

two string features: One contains the original temporal expression,

and the other contains a normalized time representation, using

ISO 8601 as above.

text(uima.cas.String): This feature holds the original expression appearing in the text.resolvedTime(uima.cas.String): This feature holds the resolved time in ISO 8601 format. For example, "Yesterday", will be resolved into "2012-11-01", etc. See the type DefaultTimeOfText for details.

temporal.TemporalExpression has four subtypes,

which closely reflects TIMEX3 classification [TIMEX3] of temporal expression. They are:

temporal.Date, temporal.Time,

temporal.Duration, and temporal.Set.

A simple annotation type is provided for text

alignment. EXCITEMENT.alignment.AlignedText represent an

aligned textual unit. It is a uima.tcas.Annotation. Its

span refers to the "source" linguistic entity. This can be a token

(word alignment), a syntax node (phrase alignments), or a sentence

(sentence alignment). It has a single feature that can point other

instances of of AlignedText:

alignedTo(uima.cas.FSArrayof typeEXCITEMENT.alignment.AlignedText): This feature holds references to otherAlignedTextinstances. Note that this array can have null, one, or multipleAlignedText.alignmentType(uima.cas.String): This feature gives additional information about the alignment ("type" of the alignment) as a string. The feature can be null, if no additional information is available.

The alignedTo feature is an array that can contain

multiple references, which means that it is one-to-many

alignment. Likewise, a null array can also be a valid value for this

feature, if the underlying alignment method is an asymmetric one;

empty array means that this AlignedText instance is a

recipient, but it does not align itself to other text.

This section outlines the types proposed for textual entailments and related sub-tasks. Full listing of the mentioned types are listed in Appendix B, Type Definition: types related to TE tasks .

The basic approach of the platform is to use a single CAS to represent a single entailment problem. The CAS holds text, hypothesis and associated linguistic analysis annotations.

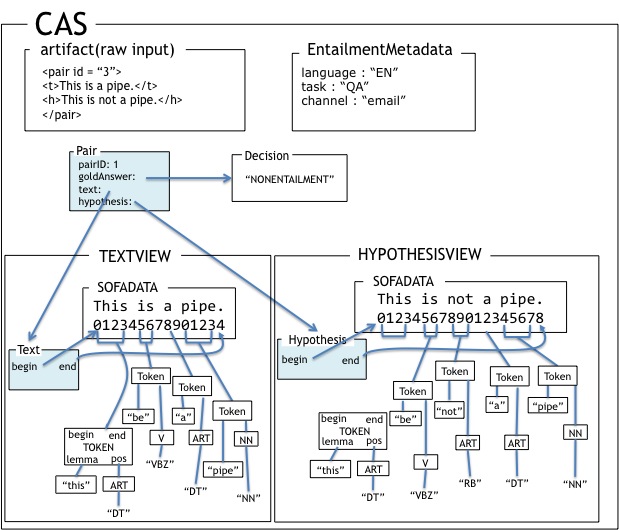

Let's first consider Figure 4, “Example of an entailment problem in CAS” which shows an

example of entailment problem represented in a. It includes UIMA CAS

elements like artifact, view, and SOFA (cf. Section 3.3.1, “Relevant UIMA concepts: Artifact, View, SOFA”). The original raw input is shown in the

figure as the artifact. It is a simple XML annotation with a T-H

pair. The artifact contains two plain-text subjects of analysis

(SOFA). Analysis tools like taggers will only see this SOFA data,

instead of the artifact XML. All annotations (all types that inherits

uima.tcas.Annotation) are annotated on top of the

SOFA. For example, the first token annotation of the text

("this") is annotated on TextView SOFA position 0 to

position 3. This SOFA positional span underlies the most common way of

accessing fetching UIMA text annotations, namely to retrieve, e.g.,

"all annotations of type Token from the TextView SOFA span 0 - 14".

Each view has exactly one SOFA. In this example, each view is enriched by token annotations

and POS annotations. Both token and POS are annotation types, and have

begin and end fields, which shows the span

of the annotation. (POS types also have begin and end span. They are

omitted here for clarity.)

The figure also shows a set of entailment specific annotation types:

they are entailment.Pair, entailment.Text,

entailment.Hypothesis, and

entailment.EntailmentMetadata.

The example has a single entailment.Pair, which points

entailment.Text and

entailment.Hypothesis. The Pair has an ID,

and it also has a goldAnswer. The CAS has no additional

pair, and the entailment problem described in this example is a single

T-H pair. There is one entailment.EntailmentMetadata in

this CAS. It shows that the language of the two views is "EN" (English

as defined in [ISO 639-1]), and the task is "QA". It

also has a string for channel value as "email".

The following subsections define the annotations in detail.

EXCITEMENT.entailment.EntailmentMetadata provides

metadata for each entailment problem. It is an extension of

uima.cas.TOP. An instance of this type should be indexed

directly on the CAS (not on TextView or

HypothesisView).

language (uima.cas.String): this string represents the language of the CAS and its entailment problem. It holds two character language identifier, as defined in [ISO 639-1]).task (uima.cas.String): this string holds task description which can be observed in the RTE challenge data.channel (uima.cas.String): this metadata field can holds a string that shows the channel where this problem was originated. For example, "customer e-mail", "online forum", or "automatic transcription", "manual transcription", etc.origin (uima.cas.String): this metadata field can hold a string that shows the origin of this text and hypothesis. A company name, or a product name.TextDocumentID (uima.cas.String): This field can hold a string that identifies the document of theTextView. This feature must have a value, ifTextCollectionIDis not null.TextCollectionID (uima.cas.String): This field can hold a string that identifies the collection name where the document of theTextViewbelongs to.HypothesisDocumentID (uima.cas.String): This field can hold a string that identifies the document of theHypothesisView. This feature must have a value, ifHypothesisCollectionIDis not null.HypothesisCollectionID (uima.cas.String): This field can hold a string that identifies the collection name where the document of theHypothesisViewbelongs to.

The values of these fields are unrestricted Strings. Pipeline users are responsible for documenting the semantics of the values if they want to ensure interoperability.

Text-Hypothesis pairs are represented by the type

EXCITEMENT.entailment.Pair. The type is a subtype of

uima.cas.TOP. An instance of Pair should be

indexed directly on the CAS (not on TextView or

HypothesisView). If the CAS represents multiple text

and/or hypothesis, the CAS will holds multiple Pair

instances.

The type has following features:

pairID (uima.cas.String): ID of this pair. The main purpose of this value is to distinguish a certain pair among multiple pairs.text (EXCITEMENT.entailment.Text): this features points the text part of this pair.hypothesis (EXCITEMENT.entailment.Hypothesis): this features points the hypothesis part of this pair.goldAnswer (EXCITEMENT.entailment.Decision): this features records the gold standard answer for this pair. If the pair (and CAS) represents a training data, this value will be filled in with the gold standard answer. If it is a null value, the pair represents a entailment problem that is yet to be answered.

EXCITEMENT.entailment.Text is an annotation

(extending uima.tcas.Annotation) that annotates a text

item within the TextView. It can occur multiple times

(for multi-text problems). It does not have any features. A

Text instance is always referred to by a

Pair instance.

Note that Text annotations and TextView are

two different concepts. A TextView is a container that

contains a SOFA plus all of its annotations, including

entailment.Text

Thus, the fact that a sentence forms part of a TextView

does not automatically mean that it is compared to a hypothesis. This

only happens if it is annotated with entailment.Text and

linked with entailment.Pair.

The situation is similar for

EXCITEMENT.entailment.Hypothesis. It is also an

annotation (uima.tcas.Annotation) that annotates a

hypothesis item within the HypothesisView. It can occur

multiple times (in multiple-hypothesis cases). It does not have any

features either. A Hypothesis instance is always referred

to by a Pair instance. Hypothesis

annotations and HypothesisView are different entities,

and sentences are only compared to a specific text annotation when

they are annotated with entailment.Hypothesis, like for

texts.

The EDA process interface receives CAS data as a

JCas object (the UIMA-created Java type hierarchy corresponding to the

UIMA CAS types). CASes that feed into the EDA process

interface must have at least a single entailment.Pair.

Naturally, each view (TextView and

HypothesisView) must have at least one

entailment.Text and entailment.Hypothesis.

Multiple text and multiple hypothesis cases will be represented by

multiple number of entailment.Pair. Note that the

relationship between pairs on one side and texts and hypotheses is not

a one-to-one relationship. Several pairs can point the same

hypothesis, or to the same text. For example, if one hypothesis is

paired with several potential texts, there will be multiple pairs. All

pairs will point to the same hypothesis, but to different texts.

EXCITEMENT.entailment.Decision represents the

entailment decision. It is a string subtype, which is a string

(uima.tcas.String) that is only permitted with predefined

values. entailment.Decision type can only haves one of

"ENTAILMENT", "NONENTAILMENT", "PARAPHRASE", "CONTRADICTION", and

"UNKNOWN" (The type can be further expanded in the future). This UIMA

type has a corresponding Java enum type within the entailment core

(see Section 4.2.1.6, “enum DecisionLabel”).

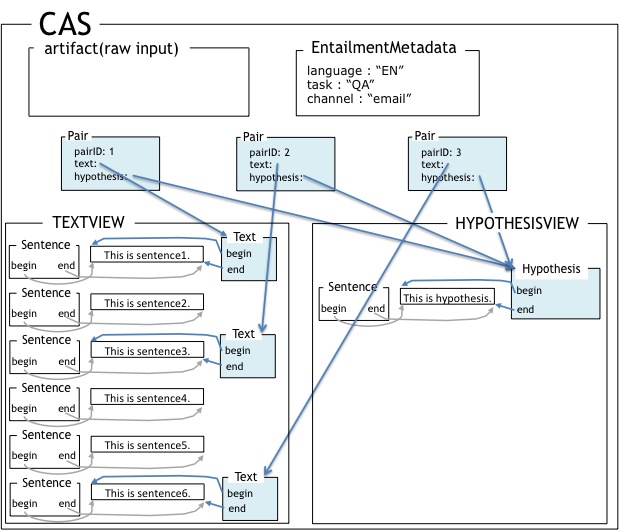

Figure 5, “Example of an entailment problem with multiple pairs” shows another example of a CAS with

entailment types. In this figure, the CAS has multiple pairs. The

TextView holds a document with six sentences. All six sentences are

annotated by sentence splitter, and additional analyzers (for clarity,

only sentence annotations are shown). Among the analyzed sentences,

the entailment.Text annotations are marked on the first,

third and sixth sentences. The HypothesisView holds only a single

sentence, which is the single Hypothesis annotation. The CAS holds

three pairs, and it is a multiple text entailment decision problem. In

the figure, linguistic analysis annotations other than sentences are

omitted for clarity. The sentences that are not annotated with a

Text annotation can be regarded as context for the

annotated texts. For example, co-reference resolutions will take

benefits from the sentences. Also, components like distance

calculation components, or text rewriting components can use the

tokens in the context sentences to get better results. (Note that

generic components like tokenizer, parser, and co-reference resolver,

do not aware of entailment specific annotations, and process all

data in the given view. "Generic" verses "TE specific" LAP component

is described in the next subsection Section 3.3.4.3, “Two groups of LAP components”.)

Note that EDAs will only decide entailment of pairs that are

explicitly marked with Pair annotations. Existence of

text or hypothesis annotation does not automatically lead to

processing in an EDA. For example, if the pair-2 is missing from the

figure, the Text annotated on sentence-3 will not be

compared to the hypothesis sentence. Also note that nothing stops us

from annotating a text item that overlaps with other text items. For

example, it would be a legal annotation if we add a new text item that

covers from sentence-2 to sentence-4 (which will include the text of

pair-1).

All EXCITEMENT LAP components can be divided into two groups:

generic analysis components

TE specific components

The most important difference between the two groups is awareness of

the two views (the TextView and the

HypothesisView as introduced in Section 3.3.4.1.3, “entailment.Text,

entailment.Hypothesis and

entailment.Decision ”). Only the second group knows

about the views, and also the entailment specific annotations (like

entailment.Metadata). General LAP components (like

taggers and parsers) only process a view. Keeping generic analysis

tools as single view annotators is intentional and well-established

UIMA practice. It enables the tools as generic as possible. In our

case, user level or transduction layer can call analysis components

not only on TE problems, but also on any textual data.

Pipelines with two (or more) views use generic AEs to annotate one of its views. If a pipeline needs to annotate all of its views, it needs to call the generic LAP component multiple times. For instance, a typical pipeline that produce a CAS for a EDA looks like this:

collectionReader reads a TE problem from the text format (like that of Section 5.2, “Input file format ” )

collectionReader generates a CAS with two views, entailment.metadata, and entailment.pair.

runtime calls a generic AE (or AAE) on the Text View (for example, a Tagger+Parser AE/AAE)

runtime calls a generic AE (or AAE) on the Hypothesis View (same Tagger+Parser AE/AAE)

runtime calls a TE specific AE on the CAS (e.g. a TE-specific alignment annotator)

the CAS is ready and passed to the entailment core.

Note that generic analysis annotators are called twice for once on TextView and once on HypothesisView (step 3 and 4), while TE-specific tool (step 5) is called only once. Also note that this is only one example, and a whole set of different processing pipelines can be designed. For example, to process a big set of short texts, we can imagine a pipeline that first call taggers for all problems as a single document and then generate multiple CASes for efficiency reasons, etc.

Ordinary annotators (taggers, parsers, etc) do not (and should

not) know about the views of TextView and

HypothesisView. They only see one view at a time, its

SOFA as a single document. Thus the two views of T and H naturally

represent two separate documents, one for text and one for hypothesis.

One problem of this setup is that we have to process other cases like:

Text (or Hypothesis) from Multiple documents.

Text and Hypothesis on the same document.

The following subsections describe how we will represent such cases with the proposed types and CAS.

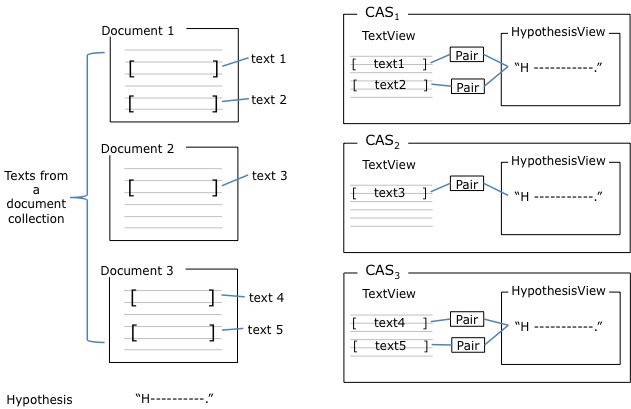

Some problems involve multiple documents. Examples are the main task of RTE6 and RTE7. They have TE problem that consists of multiple texts (T) and a single hypothesis. The texts are scattered over a set of documents (collection). Additionally, there are global features associated with the document collection (e.g., topic).

We can break down this case with multiple CASes with the proposed CAS representation. The following figure shows one artificial example. In the figure, it has a single hypothesis and multiple texts. The texts are located in three different documents. It is possible to represent this problem with three CASes.

Each CAS of the decomposed one will look like Figure 5, “Example of an entailment problem with multiple pairs”. Note that the multi document example is decomposed into several CASes whose number corresponds to the number of documents. Each view holds a single document in this setup, and normal annotators can process each view just as a normal document.

EXCITEMENT.entailment.EntailmentMetadata holds two

additional features for multiple document cases. It has

documentID and collectionID for both text

and hypothesis. Document ID identifies the document assigned in the

view, and collection ID can show the collection where the document is

belong to.

Note that this decomposition assumes that breaking down the multiple document case into multiple single document problem does not change the final output. See Section 3.3.4.4.3, “More complex problems and their use-cases ” for more complex cases of multiple text/hypothesis.

Since we have two separate views for text and hypothesis, it can be problematic when we have the text and the hypothesis from the same document.

In such a case, we will have two views with the same document (two views have the same document with same analysis result). All other TE related annotations stays normal, like metadata and entailment problem annotation. Only document specific annotations (like tagging, parsing) are duplicated into two views.

Note that this "duplicated analysis result in two views" does not mean the analysis of the document has to be done twice. We can setup a special pipeline for single document T-H case, where it first runs analysis and copies the result into two views.

Note that the above two mappings (multiple documents THs and single

TH) simplify EDA interfaces. For EDAs, all inputs look alike with two

views with entailment.Pair type annotations.

However, we can imagine more complex use cases where no trivial mapping

is possible. Within the WP3 discussions, the following tasks have been

discussed for possible future support.

Text expansion: Automatically generating a (large) set of additional texts that can entail the given input text.

Dialog system: Ranking hypothesis for the given text. The goal is to rank, instead of decision.

Hypothesis with variables: Hypothesis that includes variables, where the variables can be replaced by parts (like words) that appears in the text.

For such cases, we will probably additional interfaces on EDA side, also with new problem descriptions. However, this version of specification only deals with existing, well-known RTE problem sets. The WP3 members have agreed that additional use-cases will only be discussed in a future version of the specification.

This subsection describes types related to the representation of predicate truth values. Predicate truth annotation is an annotation that provides truth values for all predicates and clauses by traversing the parse tree and taking into account implications, presuppositions, negations, clausal embeddings, and more. See [PredicateTruth] for details.

We need four annotation types according to the annotations that

are needed for predicate truth annotator. They are

PredicateTruth, ClauseTruth,

NegationAndUncertainty, and

PredicateSignature. They add annotations to

tokens. ClauseTruth can annotate a set of consecutive

tokens, while other types only annotate a single token.

EXCITEMENT.predicatetruth.PredicateTruth is a uima.tcas.Annotation. It represents a predicate truth value annotation. It has the following feature:

value(PredicateTruthValue, a string subtype): This represents the value of the annotation. The value type subtype of string permitting only the valuesPT+,PT-, andPT?.

EXCITEMENT.predicatetruth.ClauseTruth is a uima.tcas.Annotation. It represents a clause truth value annotation. Note that the begin and end of this annotation can span more than a token. It has the following feature:

value(ClauseTruthValue, a string subtype): This represents the value of the annotation. The value type is a subtype of string permitting only the valuesCT+,CT-, andCT?.

EXCITEMENT.predicatetruth.NegationAndUncertainty is a uima.tcas.Annotation. It represents a negation-and-uncertainty annotation. It has the following feature:

value(NegationAndUncertaintyValue, a string subtype): This represents the value of the annotation. The value type is a subtype of string permitting only the valuesNU+,NU-, andNU?.

EXCITEMENT.predicatetruth.PredicateSignature is a uima.tcas.Annotation. It represents an implication signature. It has the following feature:

value(PredicateSignatureValue, a string subtype): This represents the value of the annotation. The value type is a subtype of string permitting only the values "+/-", "+/?", "?/-", "-/+", "-/?", "?/+", "+/+", "-/-", "?/?".The implementation uses a set of more fine grained values, that may grow in the future.

PredicateSignatureValuewill be extended and modified accordingly

It is possible that users want to add additional information to entailment problems. For example, task-oriented TE modules might want to include additional information such as ranks among texts, relevance of each hypothesis to some topics, etc.

The canonical way to represent such information is to extend the

entailment.Pair and related types. The type and related

types (like MetaData, Text and

Hypothesis, etc) are presented to serve the basic need of

the EXCITEMENT platform, and additional data can be embedded into CAS

structure by extending the basic types.

Naturally, the extension should be performed in a consistent manner. This means that the implementer can only define a new type that is extending the existing types, and may not change already existing types. Also, it is recommended that attributes and methods inherited from the existing types should be used in the same way so that components and EDAs which are unaware of the extensions can still can operate on the data.

The type EXCITEMENT.entailment.Decision is also open for

future extensions. When we define a new relation between text and

hypothesis, this type should be first extended to cover the new

relation. (This extension should always done with the extension of

internal DecisionLabel enumeration, see Section 4.2.1.6, “enum DecisionLabel”.

Unlike EXCITEMENT types, generic types (of Section 3.3.3, “Types for generic NLP analysis results”) should in general not be extended.

Exceptional cases may arise, though, for example when an EDA utilizes

some additional information of a specific linguistic analysis tool. In

such a case, one may choose to extend generic types to define special

types (for example, defining myNN by extending NN, etc). The EDA can

then confirm the data is processed by a specific tool (like existence

of myNN) and can use the additional data that is only available in the

extended type. Other modules that do not recognize the extended types

are still able to use the super-types in the output (in the example,

myNN will map onto NN).

It would be ideal if each linguistic analysis module (like POS taggers, parsers or coreference resolvers) were provided as a generic individual UIMA analysis engine (AE). With individual AEs, user code would be able to call these modules as UIMA components, as described in Section 3.2.2, “The EXCITEMENT linguistic analysis pipeline”.

By generic, we mean they are supposed to process normal text and not T/H pairs. The modules are not supposed to process or even be aware of annotations related to entailment problems. This ensures their reusability. The necessary specific processing for entailment data (H/T markup) should be implemented within a complete pipeline, cf. the following subsection.

As described in Section 3.3.5, “Extending existing types”, all analysis engines must annotate the given data with the common type system. In the rare case where the provided common type system is not sufficient, the implementer must not modify any of the existing types and should only expand existing types with an own type that inherits existing types.

It is recommended that the implementer should reuse existing AEs as much as possible. For example, DKPro already provides many of the well known NLP tools including major parsers and taggers for various languages. ClearTK or OpenNLP also ships various NLP tools as UIMA components. ClearTK or OpenNLP uses different type systems, thus one needs to add additional glue code to map the types into adopted DKPro types. However, such mappings are generally trivial and should not pose large problems.

Note that there is more than one flavor in providing UIMA components. This specification only defines types and components in terms of the bare UIMA framework. Each component is supposed to have its component description, and should be runnable by the UIMA framework. However, the implementers are free to utilize additional tools. For example, DKPro and ClearTK both uses UIMAFit as tool layer, which provides the functionalities of the UIMA framework with additional tool layers. The implementer may utilize such tools, as long as the end results are compatible with what are defined by this specification.

In some cases, one may choose NOT to provide individual analysis components as UIMA components. See Section 3.4.4, “An alternative: Independent pipelines with CAS output” for such a case.

Individual analysis engines (AEs) are generic. They process a single input (single CAS) and add some annotation layers back to CAS. As mentioned above, however, EDAs require more the sum of single AE annotations, namely T-H views, and T-H pair annotations. Thus, it is desirable to provide complete "pipelines" that is properly annotated with types needed for textual entailment.

A pipeline for EXCITEMENT EDA must process the followings:

The end result of the pipeline should generate the CAS as an entailment test case, as described in Section 3.3.4, “Additional Types for Textual Entailment”.

It should be able to process input of formatted text that follows the EXCITEMENT test data format, defined in Section 5.2, “Input file format ”.

All linguistic analysis annotations should follow the adopted type system (of Section 3.3.3, “Types for generic NLP analysis results”), or some extension of the adopted types.

EDA implementers must provide at least a single pipeline that produces suitable input for their EDA, or declare that an already existing pipeline as the suitable pipeline. If the EDA supports additional input mode like multiple texts/hypotheses, the implementer should provide a corresponding pipeline that produce multiple text/hypothesis.

Pre-processing of a set of document (collection) is often needed for NLP applications. In textual entailment context, a pre-processed set of training/testing data can be beneficial for various cases.

EDA implementers should provide the collection pre-processing capability.

It must process a collection of documents stored in a path.

The end result for each file process must generate the CAS as an entailment test case, as described in Section 3.3.4, “Additional Types for Textual Entailment”. The CAS should be stored in a path as the XMI serialization.

Each stored CAS data must be identical to the output of the corresponding pipeline.

It should be able to process input of formatted text that follows the EXCITEMENT test data format, defined in Section 5.2, “Input file format ”.

All linguistic analysis annotations should follow the adopted type system (of Section 3.3.3, “Types for generic NLP analysis results”), or some extension of the adopted types.

Note that XMI (XML Media Interchange format) serialization is the standard serialization supported by UIMA framework. It stores each CAS as an XML file, and the stored files can be easily deserialized by UIMA framework utilities. You can find additional information about CAS serialization in [UIMA-ser]

It is recommended that individual analysis components are supplied as UIMA analysis engine (AE). This will make sure those analysis modules can be used by top level users in various situations, as suited for the need of application programmers.

However, there can be situations where EDAs depend on specialized analysis modules which form a pipeline that cannot easily be divided into individual UIMA analysis engines. For example, assume that there is a parser and a coreference resolution system that are designed to work together to produce a specific output for a given EDA. Then, it might not be desirable to break them into individual analysis engines. In such a case, one may choose to provide only the pipeline as a single analysis engine which translates the final output of the pipeline into the common representation format.

Even in these cases, the developers should provide the pipeline as an UIMA component. This will ensure that the pipeline can be called in an identical manner to normal pipelines.

This section defines the interfaces and types of the Entailment Core. Section 4.1 provides a list of requirements and some methodological requirements. The remaining subsections contain the actual specification.

Entailment recognition (classification mode). The basic functionality that EDAs have is to take an unlabeled text-hypothesis pair as input and return an entailment decision.

Entailment recognition (training mode). Virtually all EDAs will have a training component which optimizes its parameters on a labeled set of text-hypothesis pairs.

Confidence output. EDA should be able to express their confidence in their decision.

Support for additional modes. The platform should support the processing of multiple Hs for one T, multiple Ts for one H, as well as multiple Ts and Hs.

A small set of reusable component classes. The specification should describe a small set of component classes that provide similar functionality and that are sufficiently general to be reusable by a range of EDAs.

Interfaces and types for component classes. The specification should specify user interfaces for these component classes, including the types used for the exchange of information between components and EDA.

Further extensibility.Users should be able to extend the set of components beyond the standard classes in this specification. This may however involve more effort.

To determine the set of reusable components and their interfaces, we started from the three systems that we primarily build on and followed a bottom-up generalization approach to identify shared functions (components), interfaces and types. The results are discussed in Section 4.1.3, “Identifying common components and interfaces ”. Further functionality of these components (e.g. details of their training regimens) are not be specified but in the scope of the component's developer

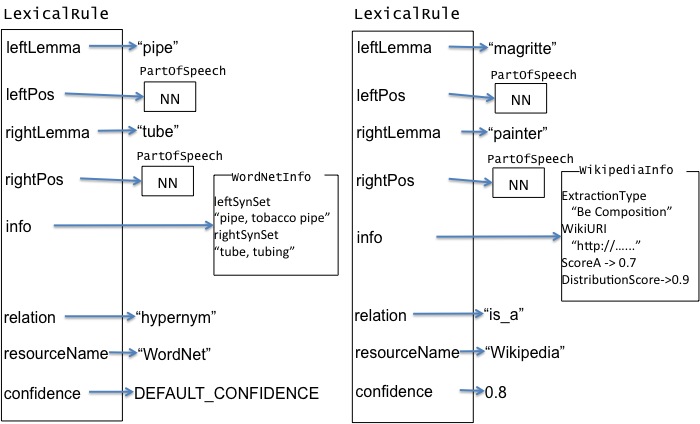

By following the bottom-up approach, we have identified a set of major common interfaces. The following figure shows the common components within the entailment core.

The top-level interfaces of the entailment core consist of the EDA interface and the mode helper interface. EDAs are the access points for entailment decision engines. They receive annotated input in the form of CAS, and return a decision object. The EDA interface also includes support for multiple Text and/or multiple Hypothesis modes. The EDA interfaces are described in Section 4.2, “EDA Interface ”. The mode helper is a wrapper tool that supports multiple text and multiple hypothesis mode for EDAs that can only process single T-H pairs. Its interface is described in Section 4.3, “Auxiliary Layer for EDAs”.

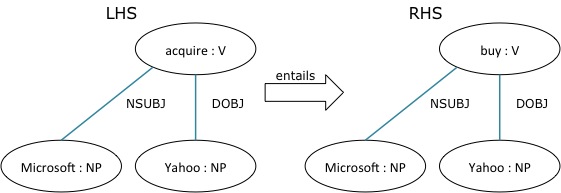

We have identified three main classes of components that EDAs might wish to consult. The first type is distance calculation components. EDAs can use the semantic distance between T-H pairs as their primary decision factor, or can use them as features in more general decision algorithms. The interface of distance calculation components specifies that they accept a CAS as the input, and return an object the distance between the two views of the CAS. The interface is described in Section 4.6, “Interface of distance calculation components”.

The second and third component classes deal with different kinds of linguistic knowledge, namely lexical knowledge and syntactic knowledge. Lexical knowledge components describe relationships between two words. Various lexical resources are generalized into EXCITEMENT common lexical knowledge interface. The interface is described in Section 4.7, “Interface of lexical knowledge components ”. Syntactic knowledge components contain describes entailment rules between two (typically lexicalized) syntactic structures, like partial parse trees. For entailments, the knowledge can be generalized into rewriting rules at partial parse trees, where left hand sides are entailing the right hand sides. The common interface for syntactic knowledge is described in Section 4.8, “Interface of syntactic knowledge components ”.

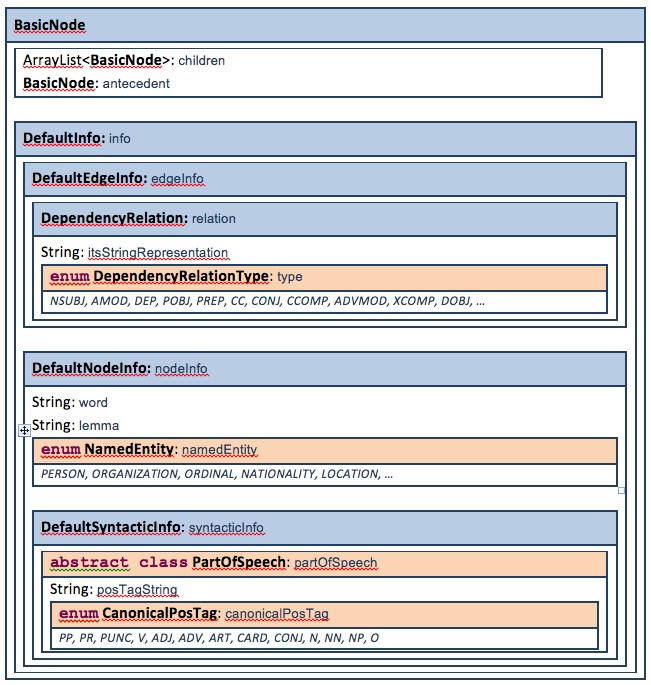

The EXCITEMENT platform uses UIMA CAS type system for the linguistic analysis pipeline (Section 3). The UIMA types are expressive enough to describe the relevant data structures, and can be used both in-memory and serialized. It is thus a natural question whether CAS types can be re-used for the communication between EDAs and components.

Our general guideline within the Entailment Core is that the interfaces are supposed to use UIMA CAS only when a complete text (Text or Hypothesis, potentially with context) is exchanged as a parameter. The reason is that even though CAS types (JCas objects) are expressive enough to describe any data, they are basically flat structures --- tables with references. This limits their capability to be used efficiently. A second reason is that JCas objects only works within CAS context. Even simple JCas objects like tokens need a external CAS to be initialized and used. This makes it impractical to use JCas objects and their types in interfaces that do not refer to complete texts (which are modeled in CASes). To repeat, we use JCas and related types only when a reference to the whole text is exchanged. Furthermore, we only exchange references to the top-level JCas object and never to any embedded types like views or annotation layers.

A second aspect that must be handled with care is the extension of JCas objects. JCas provides the flexibility to extend objects while keeping the resulting objects consistent with the original JCas types. However, this can cause duplicated definitions when mismatches are introduced. Moreover, CAS types will be used and shared among all EXCITEMENT developers which makes extensions introduced by one site problematic. For these reasons, our policy is that JCas objects should not be extended.

public interface EDABasic<T extends TEDecision>

The common interface of EDA is defined through two Java

interfaces. EDABasic interface defines methods related to

single T-H pair process and training. EDAMulti*

interfaces defines additional optional interfaces for multiple text

and/or hypothesis cases.

This interface defines the basic capability of EDAs that all

EDAs must support. It has four methods. process is main

access method of textual entailment decision. It uses

JCas as input type, and TEDecision as output

type. The interface as Java code is listed in Section C.1, “interface EDABasic and related objects”.

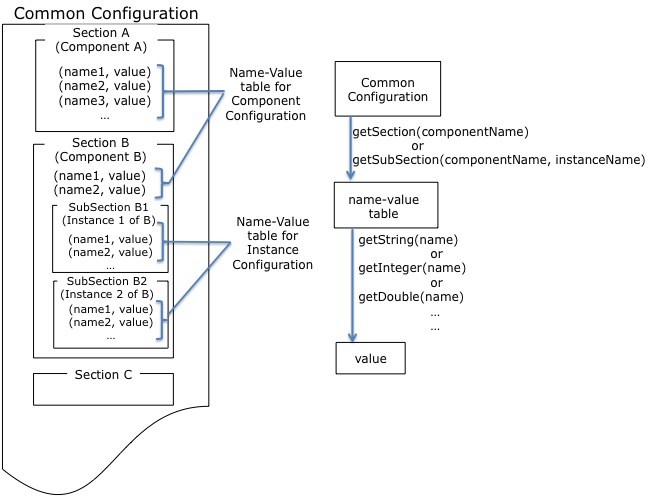

public void initialize(CommonConfig config)

This method will be called by the top level programs as the signal for initializing the EDA. All initialization of an EDA like setting up sub components and connecting resources must be done in this method. An EDA implementation must check the configuration and raise exceptions if the provided configuration is not compatible.

initialize is also responsible for passing the

configuration to common sub-components. At the initialization of core

components (like distance calculation components or knowledge resource

components), they will raise exceptions if the configuration is not

compatible with the component. initialize must pass

through all exceptions that occurred in the subcomponent

initialization to the top level.

The recommended meta data checking policy for the EDA and the core components is described in more detail in Section 4.10.1, “Recommended policy on metadata check”.

Parameters:

config: a common configuration object. This configuration object holds platform-wide configuration. An EDA should process the object to retrieve relevant configuration values for the EDA. Common configuration format and its in-memory object is defined in section Section 5.1, “Common Configuration ”.

public T process(JCas aCas)

This is the main access point for the top level. The top level

application can only use this method when the EDA is properly

configured and initialized (via initialize()).

Each time this method is called, the EDA should check the input for

its compatibility. Within the EXCITEMENT platform, EDA implementations

are decoupled with linguistic analysis pipelines, and you cannot

blindly assume that CAS input is valid. EDA implementation must check

the existence of proper annotation layers corresponding to the

configuration of the EDA.

The TE decision is returned as an object that extends

TEDecision interface which essentially holds the decision

as enum value, numeric confidence value and additional info.

Parameters:

aCas: a JCas object. Which has two named views (TextView and HypothesisView). It has linguistic analysis result withentailment.Pairas defined in Section 3.3.4, “Additional Types for Textual Entailment”.

Returns:

T extends TEDecision: An object that extendsTEDecisioninterface. See Section 4.2.1.5, “interfaceTEDecision”.

public void shutdown()

This method provides a graceful exit for the EDA. This method will be called by top-level as a signal to disengage all resources. All resources allocated by the EDA should be released when this method is called.

public void startTraining(CommonConfig c)

startTraining interface is the common interface for EDA